The Nikita Noark 5 core project is implementing the Norwegian standard for keeping an electronic archive of government documents. The Noark 5 standard documents the requirements for data systems used by the archives in the Norwegian government, and the Noark 5 web interface specification documents a REST web service for storing, searching and retrieving documents and metadata in such an archive. I've been involved in the project since a few weeks before Christmas, when the Norwegian Unix User Group announced it supported the project. I believe this is an important project, and hope it can make it possible for the government archives to use free software in the future to keep the archives we citizens depend on. But as I do not hold such an archive myself, my first personal use case is to store and analyse the public mail journal metadata published by the government. I find it useful to have a clear use case in mind when developing, to make sure the system scratches one of my itches.

If you would like to help make sure there is a free software alternative for the archives, please join our IRC channel (#nikita on irc.freenode.net) and the project mailing list.

When I got involved, the web service could store metadata about documents. But a few weeks ago, a new milestone was reached when it became possible to store full text documents too. Yesterday, I completed an implementation of a command line tool, archive-pdf, to upload a PDF file to the archive using this API. The tool is very simple at the moment: it finds existing fonds, series and files, asking the user to select which one to use if more than one exists. Once a file is identified, the PDF is associated with the file and uploaded, using the title extracted from the PDF itself. The process is fairly similar to visiting the archive, opening a cabinet, locating a file and storing a piece of paper in it. Here is a test run directly after populating the database with test data using our API tester:

~/src//noark5-tester$ ./archive-pdf mangelmelding/mangler.pdf
using arkiv: Title of the test fonds created 2017-03-18T23:49:32.103446
using arkivdel: Title of the test series created 2017-03-18T23:49:32.103446
0 - Title of the test case file created 2017-03-18T23:49:32.103446
1 - Title of the test file created 2017-03-18T23:49:32.103446
Select which mappe you want (or search term): 0
Uploading mangelmelding/mangler.pdf
PDF title: Mangler i spesifikasjonsdokumentet for NOARK 5 Tjenestegrensesnitt
File 2017/1: Title of the test case file created 2017-03-18T23:49:32.103446
~/src//noark5-tester$

You can see here how the fonds (arkiv) and series (arkivdel) only had one option, while the user needed to choose which file (mappe) to use among the two created by the API tester. The archive-pdf tool can be found in the git repository for the API tester.
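The selection step described above (use the only entry if there is one, otherwise ask) can be sketched as follows. This is a minimal sketch, not the actual archive-pdf code: the function name `select_entry`, passing in a plain list of titles instead of fetching them from the web service, and treating a non-numeric answer as a case-insensitive search term are all assumptions made for illustration.

```python
from typing import Callable, List, Optional


def select_entry(kind: str, titles: List[str],
                 ask: Callable[[str], str] = input) -> Optional[str]:
    """Pick one archive entry, prompting only when there is a choice.

    Hypothetical helper: 'titles' stands in for the entries fetched from
    the web service, and a non-numeric answer is treated as a
    case-insensitive search term that narrows the list.
    """
    candidates = list(titles)
    while candidates:
        if len(candidates) == 1:
            # A single candidate is used without asking, like the
            # "using arkiv: ..." lines in the test run.
            print(f"using {kind}: {candidates[0]}")
            return candidates[0]
        for i, title in enumerate(candidates):
            print(f"{i} - {title}")
        answer = ask(f"Select which {kind} you want (or search term): ").strip()
        if answer.isdecimal() and int(answer) < len(candidates):
            return candidates[int(answer)]
        # Treat anything else as a search term; keep the old list if
        # nothing matches, so the user is asked again.
        matches = [t for t in candidates if answer.lower() in t.lower()]
        if matches:
            candidates = matches
    return None
```

With the two test files from the run above, answering `0` picks the first one, while answering a word from a title narrows the list to the matching files and picks automatically once only one remains.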

In the project, I have mostly been working on the API tester so far, while getting to know the code base. The API tester currently uses the HATEOAS links to traverse the entire exposed service API and verify that the exposed operations and objects match the specification, as well as trying to create objects holding metadata and uploading a simple XML file to store. The tester has proved very useful for finding flaws in our implementation, as well as flaws in the reference site and the specification.
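To illustrate the kind of traversal the API tester does, here is a minimal sketch of a breadth-first crawl that follows HATEOAS links and then reports which expected relations a resource did not advertise. It is not the tester's actual code: the `_links` list of `rel`/`href` objects, the `crawl` and `missing_relations` names, the `required` mapping (standing in for what the specification demands) and the example URLs and relation names are all assumptions. The `fetch` callable hides the HTTP client, so the example runs against an in-memory fake.

```python
from collections import deque
from typing import Callable, Dict, List


def crawl(start: str, fetch: Callable[[str], dict]) -> Dict[str, List[str]]:
    """Breadth-first walk of an API by following its HATEOAS links.

    Assumption: every response is a JSON object whose "_links" entry is a
    list of {"rel": ..., "href": ...} objects. 'fetch' returns the decoded
    JSON for a URL. Returns each visited URL with the relations it listed.
    """
    seen = {start}
    queue = deque([start])
    relations: Dict[str, List[str]] = {}
    while queue:
        url = queue.popleft()
        links = fetch(url).get("_links", [])
        relations[url] = [link["rel"] for link in links]
        for link in links:
            # Visit every linked resource exactly once, even with cycles
            # such as "self" links.
            if link["href"] not in seen:
                seen.add(link["href"])
                queue.append(link["href"])
    return relations


def missing_relations(relations: Dict[str, List[str]],
                      required: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """List, per URL, the required relations the service did not advertise."""
    missing = {}
    for url, wanted in required.items():
        absent = sorted(set(wanted) - set(relations.get(url, [])))
        if absent:
            missing[url] = absent
    return missing


if __name__ == "__main__":
    # Tiny in-memory stand-in for the web service (hypothetical URLs/rels).
    fake = {
        "/": {"_links": [{"rel": "arkivstruktur", "href": "/arkivstruktur"}]},
        "/arkivstruktur": {"_links": [{"rel": "self", "href": "/arkivstruktur"},
                                      {"rel": "arkiv", "href": "/arkiv"}]},
        "/arkiv": {"_links": []},
    }
    found = crawl("/", fake.__getitem__)
    print(missing_relations(found, {"/arkiv": ["self"]}))  # {'/arkiv': ['self']}
```

Keeping the fetch step separate from the traversal makes the crawl logic testable without a running server; a real tester would plug in an HTTP client there.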

The test document I uploaded is a summary of all the specification defects we have collected so far while implementing the web service. There are several unclear and conflicting parts of the specification, and we have started writing down the questions that come up while implementing it. In the absence of an official way to structure defect reports for Noark 5, we use a format inspired by how The Austin Group collects defect reports for the POSIX standard, following their instructions for the MANTIS defect tracker system (our first submitted defect report was a request for a procedure for submitting defect reports :).

The Nikita project is implemented using Java and Spring, and is fairly easy to get up and running using Docker containers for those who want to test the current code base. The API tester is implemented in Python.

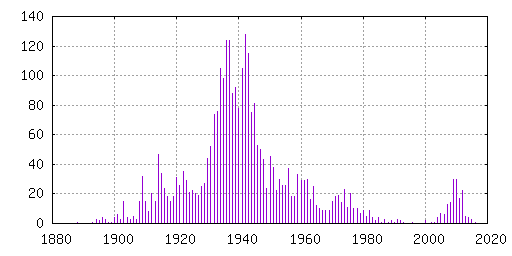

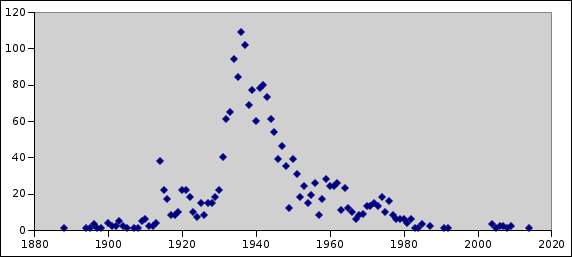

I've so far identified ten sources for IMDB title IDs for movies in the public domain or with a free license. These are the statistics reported when running 'make stats' in the git repository:


I expect the relative distribution of the remaining 3000 movies to be similar.

If you want to help, and want to ensure Wikipedia can be used to cross-reference The Internet Archive and The Internet Movie Database, please make sure entries like this are listed under the "External links" heading on the Wikipedia article for the movie:


My monthly report covers a large part of what I have been doing in the free software world. I write it for

I finally received a copy of the Norwegian Bokmål edition of "
